Google recently announced the release of VideoPoet. It’s an AI assistant capable of generating high-quality videos from various inputs like text, images, and audio. In this in-depth guide, we’ll explain what VideoPoet is, how it works, and explore its many useful capabilities.

An Overview of Google’s VideoPoet

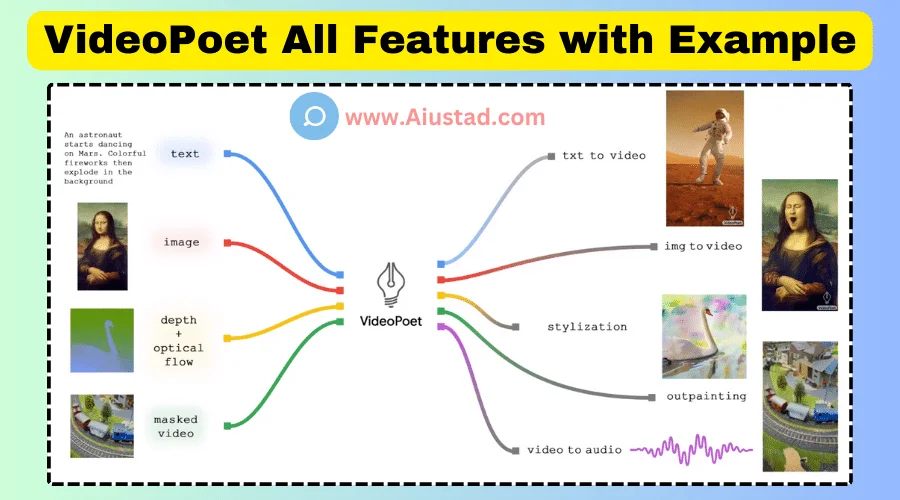

VideoPoet is an AI model developed by Google that uses deep learning to generate video content. Some key things to know:

- It’s a Multimodal model that can handle multiple types of inputs like text, images, video, and audio to produce video output.

- VideoPoet employs a Decoder-only architecture similar to DALL-E and ChatGPT for flexible generation.

- It’s been Trained on billions of images and millions of videos to understand complex visual and motion concepts.

- The core model allows for various generative tasks like text-to-video, image-to-video, video stylization, and more.

- Google aims to integrate VideoPoet capabilities into products to enhance creativity and media production workflows.

In essence, VideoPoet represents a major step in AI’s ability to understand and generate high-fidelity video content from sparse or incomplete inputs. Let’s explore its powers in more detail.

Capabilities of VideoPoet By Google

Google’s latest AI model has various amazing features that can easily stand high in the latest market trends

Text-to-Video Generation

One of VideoPoet’s most fascinating skills is generating original videos with only a text prompt. You can describe a scene, story, or idea, and VideoPoet brings it to life visually in high-quality 16fps video format.

Early examples show it can capture setting details, character actions, movement, and plot points from just a few sentences. The possibilities for screenwriting, storytelling, and content creation are immense.

Image-to-Video

Feed VideoPoet a single image, and it analyzes visual concepts to envision how that scene might unfold over time as a moving image sequence. Early results smoothly animate still scenes with realistic motion, pacing, and cinematography.

Stylization

Stylization refers to VideoPoet’s power to render input videos in various art styles and visual effects simply by describing the desired style in a prompt. This allows animation videos in the distinctive look of different artistic genres or fantasy visual treatments.

Inpainting and Outpainting

VideoPoet uses generative modelling to complete missing frame portions guided by surrounding pixels. The model learns natural image and motion flow patterns to blend additions convincingly into the original footage.

Video Editing

Compared to contemporary tools, VideoPoet stands out because it can generate videos with many coherent shots over extended durations (30+ seconds currently). This enables use cases like automatic highlight reels or recaps from long-form footage.

While there are limitations in fully replacing human creativity or precision video work, Google’s results demonstrate the huge potential for assisted video production using these generative AI techniques. And VideoPoet’s capabilities are likely to continue advancing.

How VideoPoet Works

To achieve its multimodal abilities, Google equipped VideoPoet with several key technical elements:

- MMVT-2 Video Tokenizer – Encodes video frames into discrete semantic tokens for the model to understand. This MAG version improves on prior work.

- SoundStream Audio Tokenizer – Similarly encodes audio waveforms into tokens the model understands for audio generation tasks.

- Autoregressive Language Model – The core BERT-like Transformer model sequentially predicts future video/audio tokens based on prior context (inputs and partially generated outputs).

- Multimodal Pre-Training – VideoPoet leverages state-of-the-art techniques like MAE to learn joint representations from massive multimodal datasets before fine-tuning for specific tasks.

- Decoder-Only Architecture – Unlike most generative models trained to encode and decode data, VideoPoet only needs to learn generation, allowing novel combinations of inputs and outputs.

This sophisticated architecture gives VideoPoet a unified understanding of the relationship between different media types, allowing it to translate between them seamlessly for various generative tasks.

Click Here to Explore Meta AI & it’s Features

Frequently Asked Questions about VideoPoet

Is VideoPoet available for everyone to use yet?

No, VideoPoet is still in early research and development phases. Google has not publicly released an interface or API for general users yet. But they may integrate capabilities into products like Search and Android over time.

What types of videos can VideoPoet generate?

Currently, VideoPoet focuses on generating portrait orientation videos at 16 FPS. But it can also work with square video and generate soundtrack audio to accompany clips based on visual inputs. As it advances, support for more formats will likely be added.

How long of a video can VideoPoet generate?

The longest continuous video sequences demonstrated so far are around 30 seconds. However, VideoPoet excels at multi-shot video editing within that timeframe by incorporating varied camera angles, transitions, and scenes coherently.

Is the video quality good in videopoet?

Based on examples shared, VideoPoet produces videos with high visual fidelity and realism for key attributes like scene settings, character motions, facial expressions, and dynamic camera work. However, its resolution is still limited compared to professional filmed content. As with all generative models, there may also be unintended artefacts sometimes.

Can VideoPoet’s output be used commercially?

Since VideoPoet is an experimental research project and not publicly released, any generated content should not be assumed copyright-free or able to be monetized without permission from Google. Commercial and non-research use would require negotiating explicit licenses once the technology matures.

How does VideoPoet compare to other AI video tools?

Many existing tools focus narrowly on one task, like text-to-video or video enhancement. VideoPoet stands out for its multimodality, longer-range video coherence, and quality across a diverse set of generation capabilities beyond any single competitor currently. However, it is still early in development compared to more polished products advancing the field.

Key Takeaways

- VideoPoet is Google’s breakthrough multimodal AI model capable of generating high-quality video from text, images, existing video clips and more.

- It employs sophisticated technical approaches, including MMVT-2 video encoding and autoregressive language modelling, to achieve this broad range of generative abilities.

- While not publicly available, VideoPoet demonstrates huge potential for assisting with video creation, editing, and other media workflows through high-fidelity computer generation.

- As one of the most advanced video-focused AI tools, It points towards a future where generative algorithms can automate, augment and enhance many stages of video production.

- As the technology matures through ongoing research, VideoPoet’s real-world applications will likely emerge integrated within Google’s products and services.

1 thought on “What is Google VideoPoet? A Comprehensive Guide”